Abstract

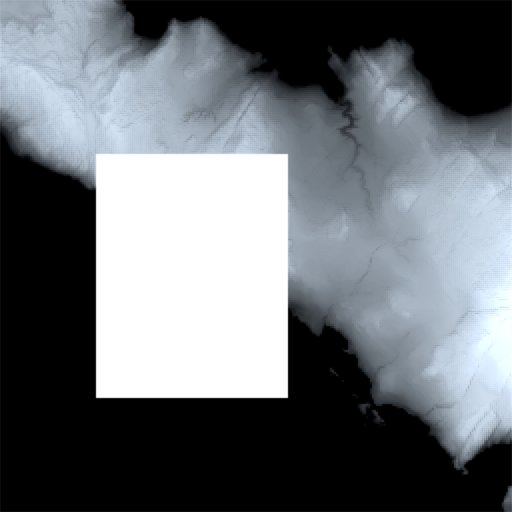

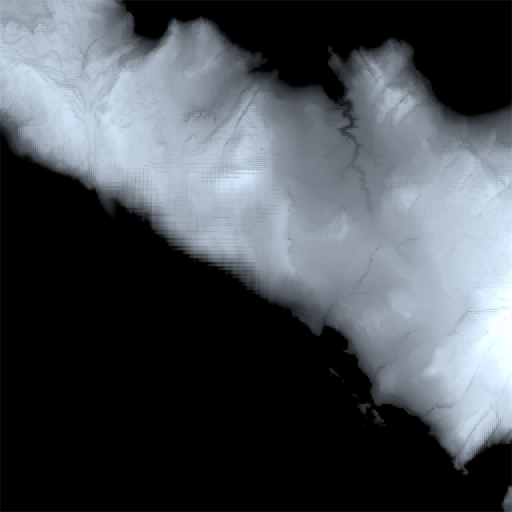

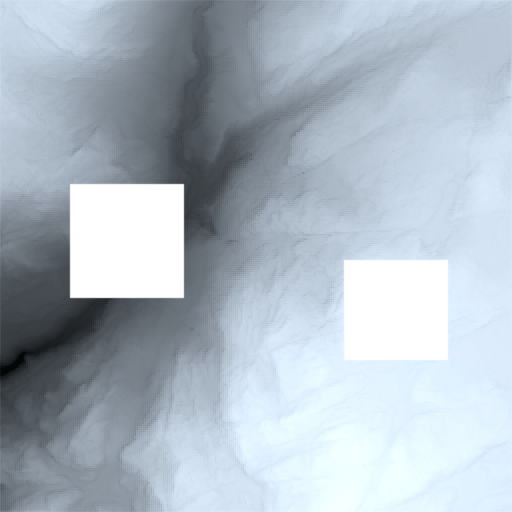

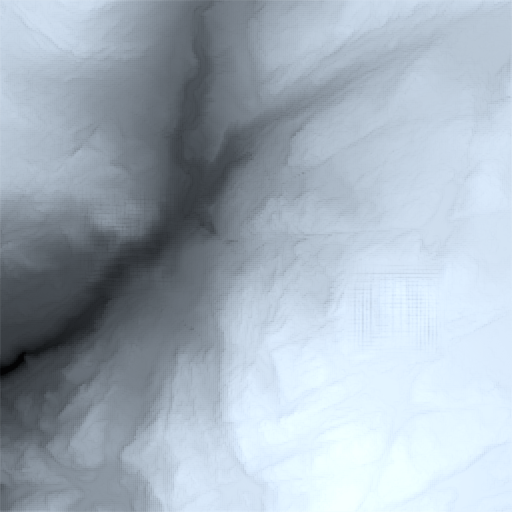

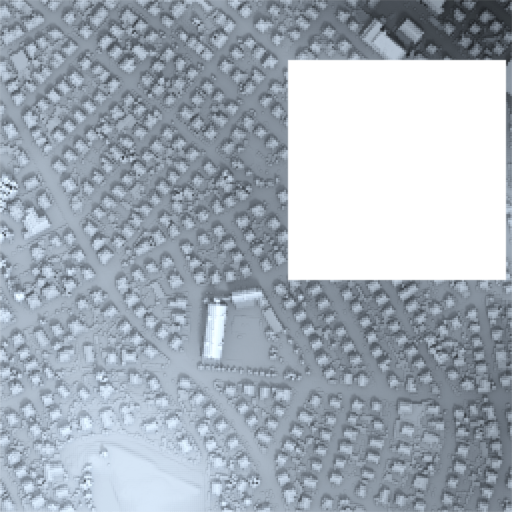

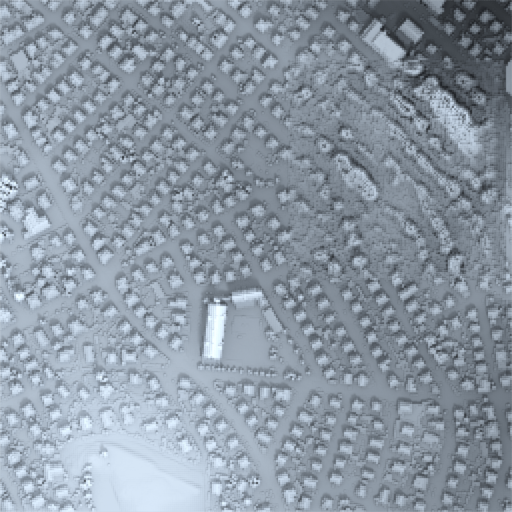

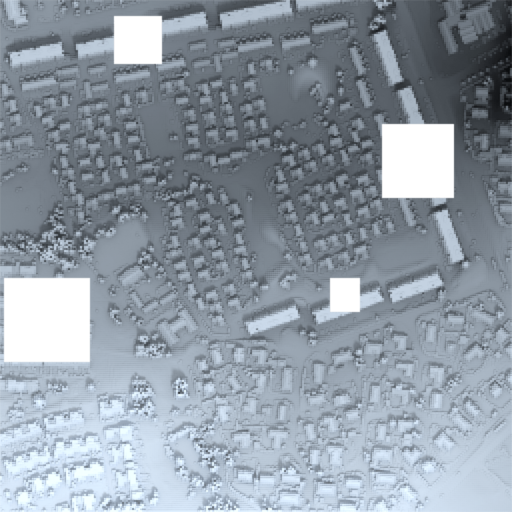

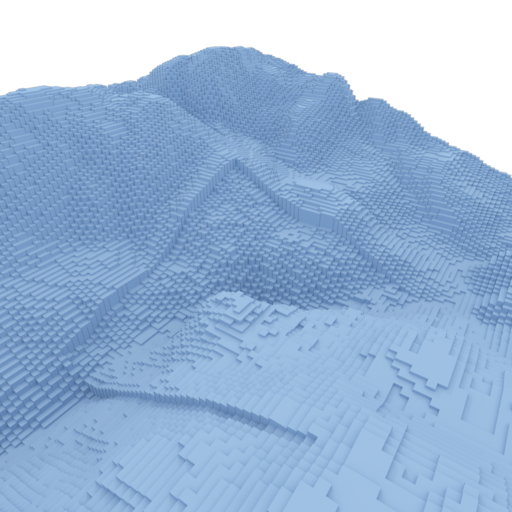

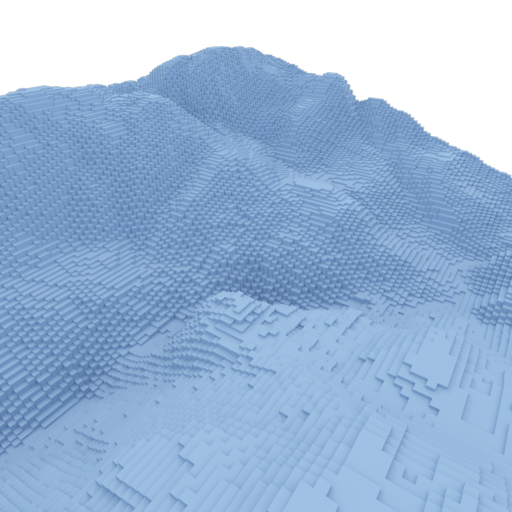

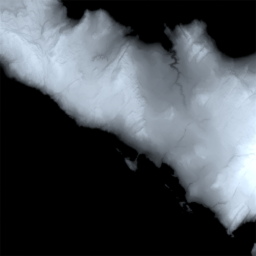

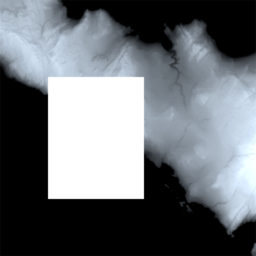

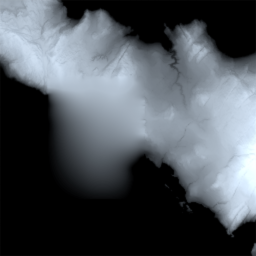

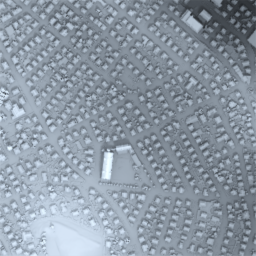

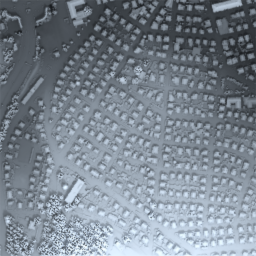

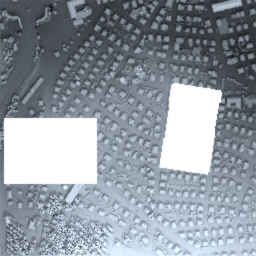

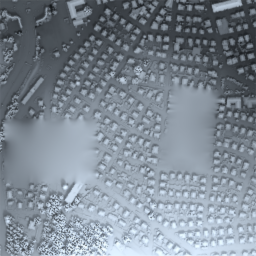

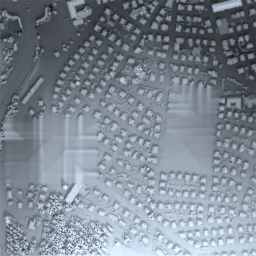

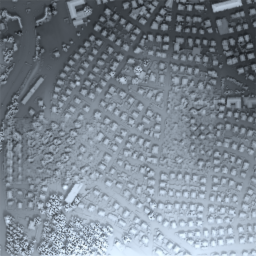

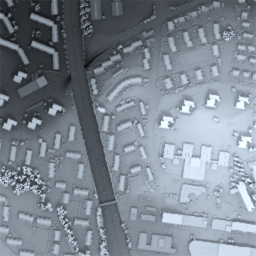

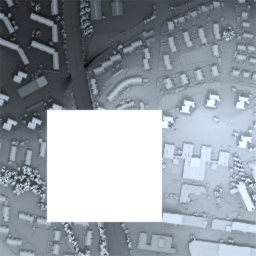

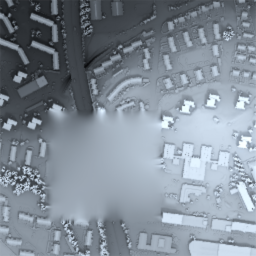

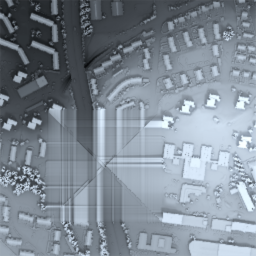

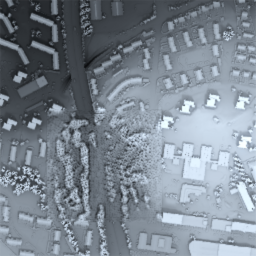

In recent years, advances in machine learning algorithms, cheap computational resources, and the availability of big data have spurred the deep learning revolution in various application domains. In particular, supervised learning techniques in image analysis have led to superhuman performance in various tasks, such as classification, localization, and segmentation, while unsupervised learning techniques based on increasingly advanced generative models have been applied to generate high-resolution synthetic images indistinguishable from real images. In this paper, we consider a state-of-the-art machine learning model for image inpainting, namely a Wasserstein Generative Adversarial Network based on a fully convolutional architecture with a contextual attention mechanism. We show that this model can successfully be transferred to the setting of digital elevation models (DEMs) for the purpose of generating semantically plausible data for filling voids. Training, testing, and experimentation are done on GeoTIFF data from various regions in Norway, made openly available by the Norwegian Mapping Authority.
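The void-filling setup described in the abstract can be illustrated with a minimal sketch. It is not the paper's implementation: all function names are hypothetical, the min-max normalization to [-1, 1] is an assumed preprocessing choice, and `mean_fill_generator` is a trivial stand-in for a trained inpainting network. The sketch only shows how a DEM tile, a void mask, and a generator prediction might be combined so that known elevations are left untouched.

```python
import numpy as np


def rectangular_void_mask(shape, top, left, height, width):
    """Return a mask with 1.0 inside the void and 0.0 where data is known."""
    mask = np.zeros(shape, dtype=np.float64)
    mask[top:top + height, left:left + width] = 1.0
    return mask


def normalize_dem(dem, mask):
    """Scale elevations to [-1, 1] using only the known (non-void) cells."""
    known = dem[mask == 0]
    lo, hi = float(known.min()), float(known.max())
    scale = (hi - lo) or 1.0  # avoid division by zero on flat tiles
    return 2.0 * (dem - lo) / scale - 1.0, (lo, scale)


def denormalize_dem(norm, bounds):
    """Invert normalize_dem, mapping [-1, 1] back to elevation units."""
    lo, scale = bounds
    return (norm + 1.0) / 2.0 * scale + lo


def mean_fill_generator(incomplete, mask):
    """Stand-in for a trained network: predicts the mean of the known cells."""
    return np.full_like(incomplete, incomplete[mask == 0].mean())


def fill_void(dem, mask, generator):
    """Fill the masked region of a DEM tile with the generator's prediction."""
    dem = np.asarray(dem, dtype=np.float64)
    mask = np.asarray(mask, dtype=np.float64)
    norm, bounds = normalize_dem(dem, mask)
    incomplete = norm * (1.0 - mask)           # hide the void from the generator
    predicted = generator(incomplete, mask)    # full-size prediction
    completed = mask * predicted + (1.0 - mask) * norm  # keep known cells as-is
    return denormalize_dem(completed, bounds)


# Example: a synthetic 8x8 sloped tile with a 3x3 void.
tile = np.arange(64, dtype=np.float64).reshape(8, 8) * 10.0
void = rectangular_void_mask(tile.shape, top=2, left=2, height=3, width=3)
filled = fill_void(tile, void, mean_fill_generator)
```

Any callable with the same `(incomplete, mask)` signature could take the place of the stand-in generator. Real GeoTIFF tiles would first have to be read into arrays with a raster library, which is omitted here.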

Model architecture

Results

BibTeX

@article{Gavriil2019,
  author={K. {Gavriil} and G. {Muntingh} and O. J. D. {Barrowclough}},
  journal={IEEE Geoscience and Remote Sensing Letters},
  title={Void Filling of Digital Elevation Models With Deep Generative Models},
  year={2019},
  volume={16},
  number={10},
  pages={1645-1649},
  doi={10.1109/LGRS.2019.2902222},
  ISSN={1558-0571},
  month={Oct}
}

Acknowledgements

We adapted the GitHub repository generative_inpainting to the setting of Digital Elevation Models. The open source C++ library GoTools was used for generating the LR B-spline data. Data provided courtesy of the Norwegian Mapping Authority (www.hoydedata.no), copyright Kartverket (CC BY 4.0). This project has received funding from the European Union’s Horizon 2020 research and innovation programme under the Marie Skłodowska-Curie grant agreement No 675789. This project was also supported by an IKTPLUSS grant, project number 270922, from the Research Council of Norway.